[For Japanese readers: a reader has kindly translated this post into Japanese. Please refer to that version.]

I run a small software business in central Japan. Over the years, I’ve worked both in the local Japanese government (as a translator) and in Japanese industry (as a systems engineer), and have some minor knowledge of how things are done here. English-language reporting on the matter has been so bad that my mother is worried for my safety, so in the interests of clearing the air I thought I would write up a bit of what I know.

A Quick Primer On Japanese Geography

Japan is an archipelago made up of many islands, of which there are four main ones: Honshu, Shikoku, Hokkaido, and Kyushu. The one that almost everybody outside of the country will think of when they think “Japan” is Honshu: in addition to housing Tokyo, Nagoya, Osaka, Kyoto, and virtually every other city that foreigners have heard of, it has most of Japan’s population and economic base. Honshu is the big island that looks like a banana on your globe, and was directly affected by the earthquake and tsunami…

… to an extent, anyway. See, the thing that people don’t realize is that Honshu is massive. It is larger than Great Britain. (A country which does not typically refer to itself as a “tiny island nation.”) At about 800 miles long, it stretches from roughly Chicago to New Orleans. Quite a lot of the reporting on Japan, including that which is scaring the heck out of my friends and family, is the equivalent of someone ringing up Mayor Daley during Katrina and saying “My God man, that’s terrible — how are you coping?”

The public perception of Japan, at home and abroad, is disproportionately influenced by Tokyo’s outsized contribution to Japanese political, economic, and social life. It also gets more news coverage than warranted because one could poll every journalist in North America and not find one single soul who could put Miyagi or Gifu on a map. So let’s get this out of the way: Tokyo, like virtually the whole island of Honshu, got a bit shaken and no major damage was done. They have reported 1 fatality caused by the earthquake. By comparison, on any given Friday, Tokyo will typically have more deaths caused by traffic accidents. (Tokyo is also massive.)

Miyagi is the prefecture hardest hit by the tsunami, and Japanese TV is reporting that they expect fatalities in the prefecture to exceed 10,000. Miyagi is 200 miles from Tokyo. (Remember — Honshu is massive.) That’s about the distance between New York and Washington DC.

Japanese Disaster Preparedness

Japan is exceptionally well-prepared to deal with natural disasters: it has spent more on the problem than any other nation, largely as a result of frequently experiencing them. (Have you ever wondered why you use Japanese for “tsunamis” and “typhoons”?) All levels of the government, from the Self Defense Forces to technical translators working at prefectural technology incubators in places you’ve never heard of, spend quite a bit of time writing and drilling on what to do in the event of a disaster.

For your reference, as approximately the lowest person on the org chart for Ogaki City (it’s in Gifu, which is fairly close to Nagoya, which is 200 miles from Tokyo, which is 200 miles from Miyagi, which was severely affected by the earthquake), my duties in the event of a disaster were:

- Ascertain my personal safety.

- Report to the next person on the phone tree for my office, which we drilled once a year.

- Await mobilization in case response efforts required English or Spanish translation.

Ogaki has approximately 150,000 people. The city’s disaster preparedness plan lists exactly how many come from English-speaking countries. It is fewer than two dozen. Why have a maintained list of English translators at the ready? Because Japanese does not have a word for excessive preparation.

Another anecdote: I previously worked as a systems engineer for a large computer consultancy, primarily in making back office systems for Japanese universities. One such system is called a portal: it lets students check on, e.g., their class schedule from their cell phones.

The first feature of the portal, printed in bold red ink and obsessively tested, was called Emergency Notification. Basically, we were worried about you attempting to check your class schedule while there was a wall of water coming to inundate your campus, so we built in the capability to take over all pages and say, essentially, “Forget about class. Get to shelter now.”
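For the programmers in the audience, here is a minimal sketch of the "take over all pages" idea. It is not the actual portal code: the names `EmergencyState`, `with_emergency_override`, and `render_page` are hypothetical, and a real system would sit in front of a web framework rather than plain functions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EmergencyState:
    """Holds the active emergency message, if any (hypothetical name)."""
    message: Optional[str] = None

    def activate(self, message: str) -> None:
        # An engineer following the checklist would flip this switch.
        self.message = message

    def clear(self) -> None:
        self.message = None


def with_emergency_override(
    state: EmergencyState, render: Callable[[str], str]
) -> Callable[[str], str]:
    """Wrap a page renderer so that every page shows the emergency
    message while one is active, regardless of the page requested."""
    def handler(path: str) -> str:
        if state.message is not None:
            return f"EMERGENCY: {state.message}"
        return render(path)
    return handler


def render_page(path: str) -> str:
    # Stand-in for the normal portal pages (class schedule and so on).
    return f"Normal content for {path}"


state = EmergencyState()
handler = with_emergency_override(state, render_page)
```

The point of putting the check in one wrapper is that no individual page can forget to show the warning: once the state is activated, a request for the class schedule gets the shelter message instead.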

Many of our clients are in the general vicinity of Tokyo. When Nagoya (again, same island but very far away) started shaking during the earthquake, here’s what happened:

- T-0 seconds: Oh dear, we’re shaking.

- T+5 seconds: Where was that earthquake?

- T+15 seconds: The government reports that we just had a magnitude 8.8 earthquake off the coast of East Japan. Which clients of ours are affected?

- T+30 seconds: Two or three engineers in the office start saying “I’m the senior engineer responsible for X, Y, and Z universities.”

- T+45 seconds: “I am unable to reach X University’s emergency contact on the phone. Retrying.” (Phones were inundated virtually instantly.)

- T+60 seconds: “I am unable to reach X University’s emergency contact on the phone. I am declaring an emergency for X University. I am now going to follow the X University Emergency Checklist.”

- T+90 seconds: “I have activated emergency systems for X University remotely. Confirm activation of emergency systems.”

- T+95 seconds: (second most senior engineer) “I confirm activation of emergency systems for X University.”

- T+120 seconds: (manager of group) “Confirming emergency system activations, sound off: X University.” “Systems activated.” “Confirmed systems activated.” “Y University.” “Systems activated.” “Confirmed systems activated.” …

While this is happening, it’s somebody else’s job to confirm the safety of the colleagues of these engineers, at least a few of whom are out of the office at client sites. Their checklist helpfully notes that confirmation of the safety of engineers should be done by visual inspection first, because they’ll be really effing busy for the next few minutes.

So that’s the view of the disaster from the perspective of a wee little office several hundred miles away, responsible for a system which, in the scheme of things, was of very, very minor importance.

Scenes like this started playing out up and down Japan within, literally, seconds of the quake.

When the mall I was in started shaking, I at first thought it was because it was a windy day (Japanese buildings are designed to shake because the alternative is to be designed to fail catastrophically in the event of an earthquake), until I looked out the window and saw the train station. A train pulling out of the station had hit the emergency brakes and was stopped within 20 feet. Again, that was just someone doing what he was trained for. A few seconds after the train stopped, after reporting his status, the driver would have gotten on the loudspeakers and apologized for inconvenience caused by the earthquake. (Seriously, it’s in the manual.)

Everything Pretty Much Worked

Let’s talk about trains for a second. All Japanese trains survived the tsunami without incident. [Edited to add: Initial reports that trains were washed away by the tsunami were incorrect. Contact was initially lost with 5 trains, but all passengers and crew were rescued. See here, in Japanese.] All of them, including ones travelling in excess of 150 miles per hour, made immediate emergency stops and no one died. There were no derailments. There were no collisions. There was no loss of control. The story of Japanese railways during the earthquake and tsunami is the story of an unceasing drumbeat of everything going right.

This was largely the story up and down Honshu. Planes stayed in the sky. Buildings stayed standing. Civil order continued uninterrupted.

On the train line between Ogaki and Nagoya, one passes dozens of factories, including notably a brewery which holds beer in pressure tanks painted to look like gigantic beer bottles. Many of these factories have large amounts of extraordinarily dangerous chemicals maintained, at all times, in conditions which would resemble fuel-air bombs if they had a trigger attached to them. None of them blew up. There was a handful of very photogenic failures out east, which is an occupational hazard of dealing with large quantities of things that have a strongly adversarial response to materials like oxygen, water, and chemists. We’re not going to stop doing that because modern civilization and its luxuries like cars, medicine, and food are dependent on industry.

The overwhelming response of Japanese engineering to the challenge posed by an earthquake larger than any in the last century was to function exactly as designed. Millions of people are alive right now because the system worked and the system worked and the system worked.

That this happened was, I say with no hint of exaggeration, one of the triumphs of human civilization. Every engineer in this country should be walking a little taller this week. We can’t say that too loudly, because it would be inappropriate with folks still missing and many families in mourning, but it doesn’t make it any less true.

Let’s Talk Nukes

There is currently a lot of panicked reporting about the problems with two of Tokyo Electric’s nuclear power generation plants in Fukushima. Although few people would admit this out loud, I think it would be fair to include these in the count of systems which functioned exactly as designed. For more detail on this from someone who knows nuclear power generation, which rules out him being a reporter, see here.

- The instant response — scramming the reactors — happened exactly as planned and, instantly, removed the Apocalyptic Nightmare Scenarios from the table.

- There were some failures of important systems, mostly related to cooling the reactor cores to prevent a meltdown. To be clear, a meltdown is not an Apocalyptic Nightmare Scenario: the entire plant is designed such that when everything else fails, the worst thing that happens is somebody gets a cleanup bill with a whole lot of zeroes in it.

- Failure of the systems is contemplated in their design, which is why there are so many redundant ones. You won’t even hear about most of the failures up and down the country because a) they weren’t nuclear related (a keyword which scares the heck out of some people) and b) redundant systems caught them.

- The tremendous public unease over nuclear power shouldn’t be allowed to overpower the conclusion: nuclear energy, in all the years leading to the crisis and continuing during it, is absurdly safe. Remember the talk about the trains and how they did exactly what they were supposed to do within seconds? Several hundred people still drowned on the trains. That is a tragedy, but every person connected with the design and operation of the railways should be justifiably proud that that was the worst thing that happened. At present, in terms of radiation risk, the tsunami appears to be a wash: on the one hand there’s a near nuclear meltdown, on the other hand the tsunami disrupted something really dangerous: international flights. (One does not ordinarily associate flying commercial airlines with elevated radiation risks. Then again, one doesn’t normally associate eating bananas with it, either. When you hear news reports of people exposed to radiation, keep in mind, at the moment we’re talking a level of severity somewhere between “ate a banana” and “carries a Delta Skymiles platinum membership card”.)

What You Can Do

Far and away the worst thing that happened in the earthquake was that a lot of people drowned. Your thoughts and prayers for them and their families are appreciated. This is terrible, and we’ll learn ways to better avoid it in the future, but considering the magnitude of the disaster we got off relatively lightly. (An earlier draft of this post said “lucky.” I have since reworded because, honestly, screw luck. Luck had absolutely nothing to do with it. Decades of good engineering, planning, and following the bloody checklist are why this was a serious disaster and not a nation-ending catastrophe like it would have been in many, many other places.)

Japan’s economy just got a serious monkey wrench thrown into it, but it will be back up to speed fairly quickly. (By comparison, it was probably more hurt by either the Lehman Shock or the decision to invent a safety crisis to help out the US auto industry. By the way, wondering what you can do for Japan? Take whatever you’re saying currently about “We’re all Japanese”, hold onto it for a few years, and copy it into a strongly worded letter to your local Congresscritter the next time nativism runs rampant.)

A few friends of mine have suggested coming to Japan to pitch in with the recovery efforts. I appreciate your willingness to brave the radiological dangers of international travel on our behalf, but that plan has little upside to it: when you get here, you’re going to be a) illiterate, b) unable to understand instructions, and c) a productivity drag on people who are quite capable of dealing with this but will instead have to play Babysit The Foreigner. If you’re feeling compassionate and want to do something for the sake of doing something, find a charity in your neighborhood. Give it money. Tell them you were motivated to do so by Japan’s current predicament. You’ll be happy, Japan will recover quickly, and your local charity will appreciate your kindness.

On behalf of myself and the other folks in our community, thank you for your kindness and support.

[This post is released under a Creative Commons license, and derivative works, including translations, are permitted. Details are here. I intend to translate it into Japanese over the next few days, but if you can do it sooner or want to otherwise use it, please feel free.]

[Edit: Due to overwhelming volume and a poor signal-to-noise ratio, I am closing comments on this post, but I encourage you to blog about it if you feel strongly about something.]

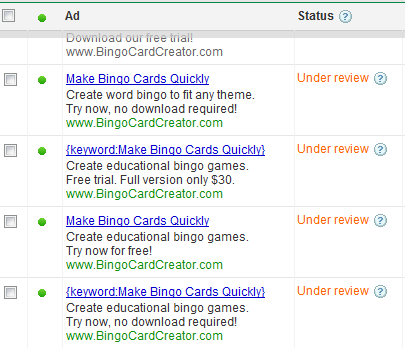
